TL;DR

This post explores how principles of holography, quantum computing, and neural networks might share a common foundation in interference patterns. Could these parallels help us better understand AI, data storage, and brain functions?

Introduction

I first read The Holographic Universe by Michael Talbot over 15 years ago, and its ideas have stayed with me ever since. Recently, as I delved into understanding how quantum computers work, I came across Grover’s algorithm—a remarkable quantum search algorithm. Its mechanics immediately reminded me of a story from Talbot’s book, particularly one that explores the concept of „pattern matching.“

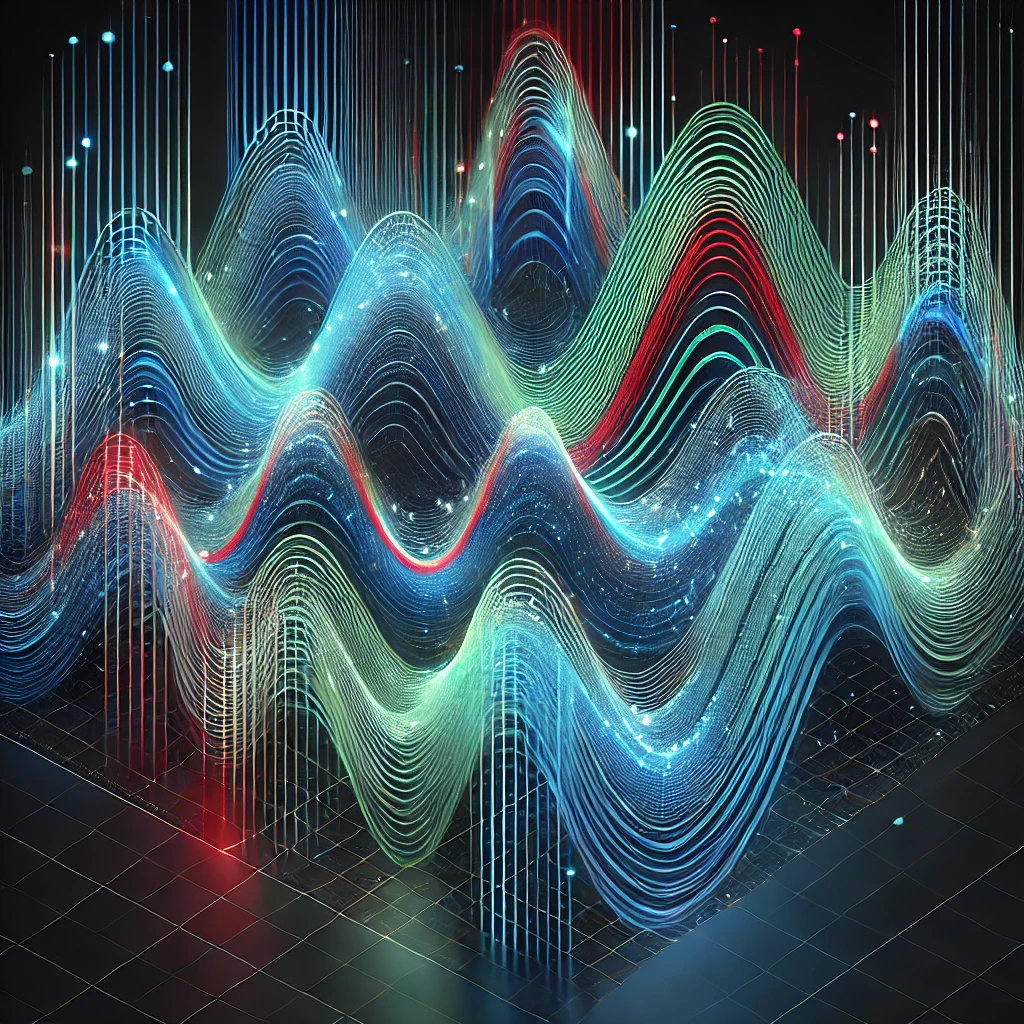

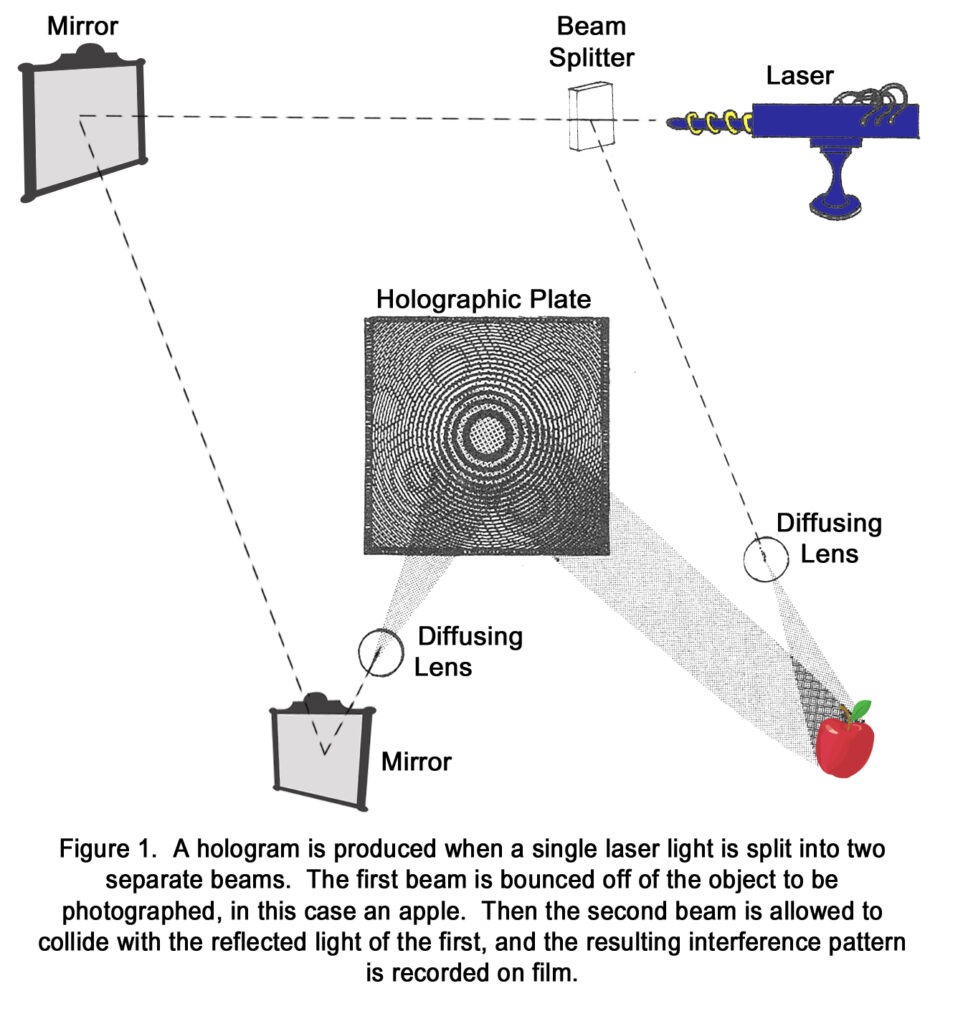

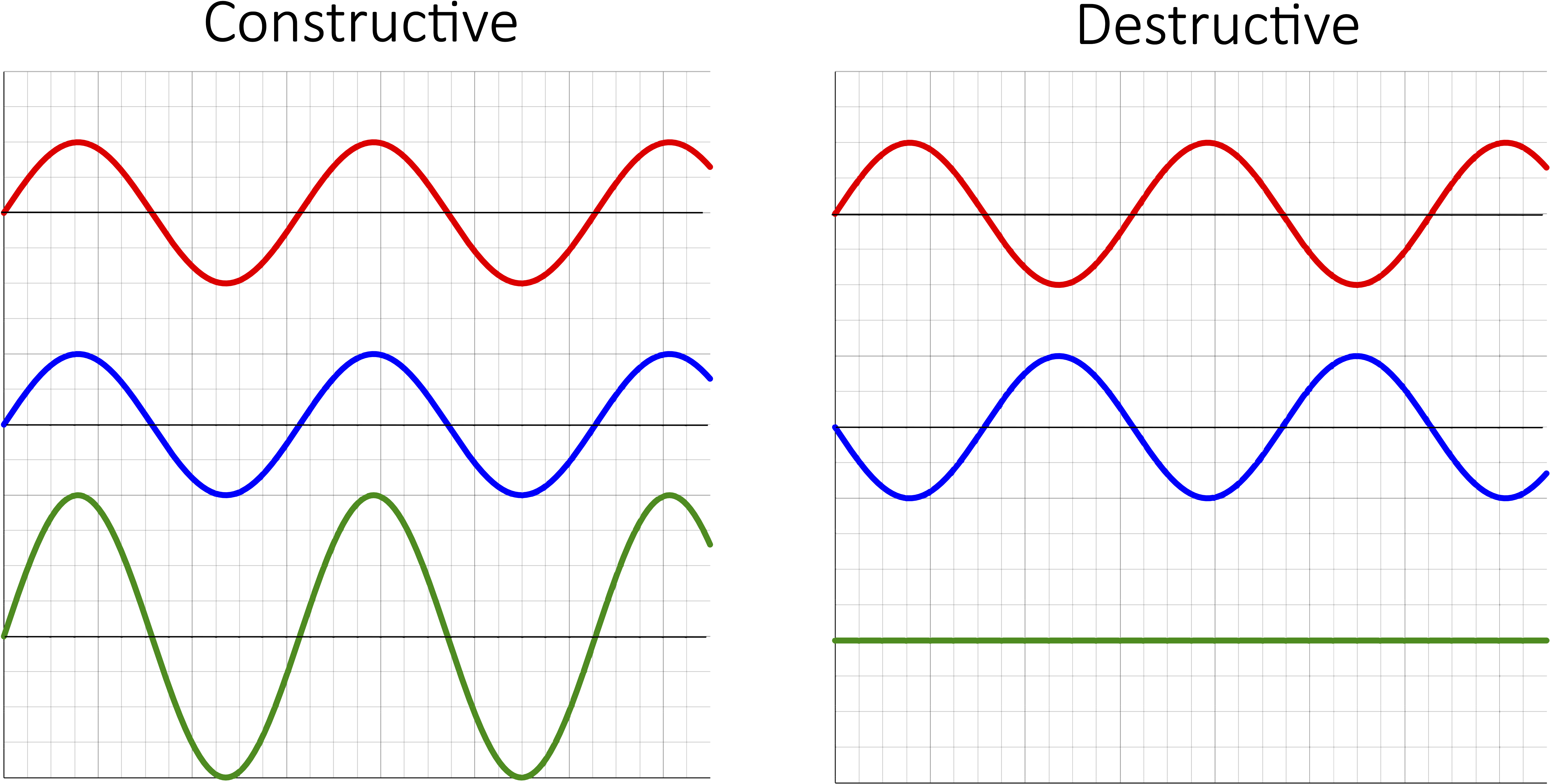

But more on that later. First we must understand what „interference patterns“ are. Have a look at the first picture:

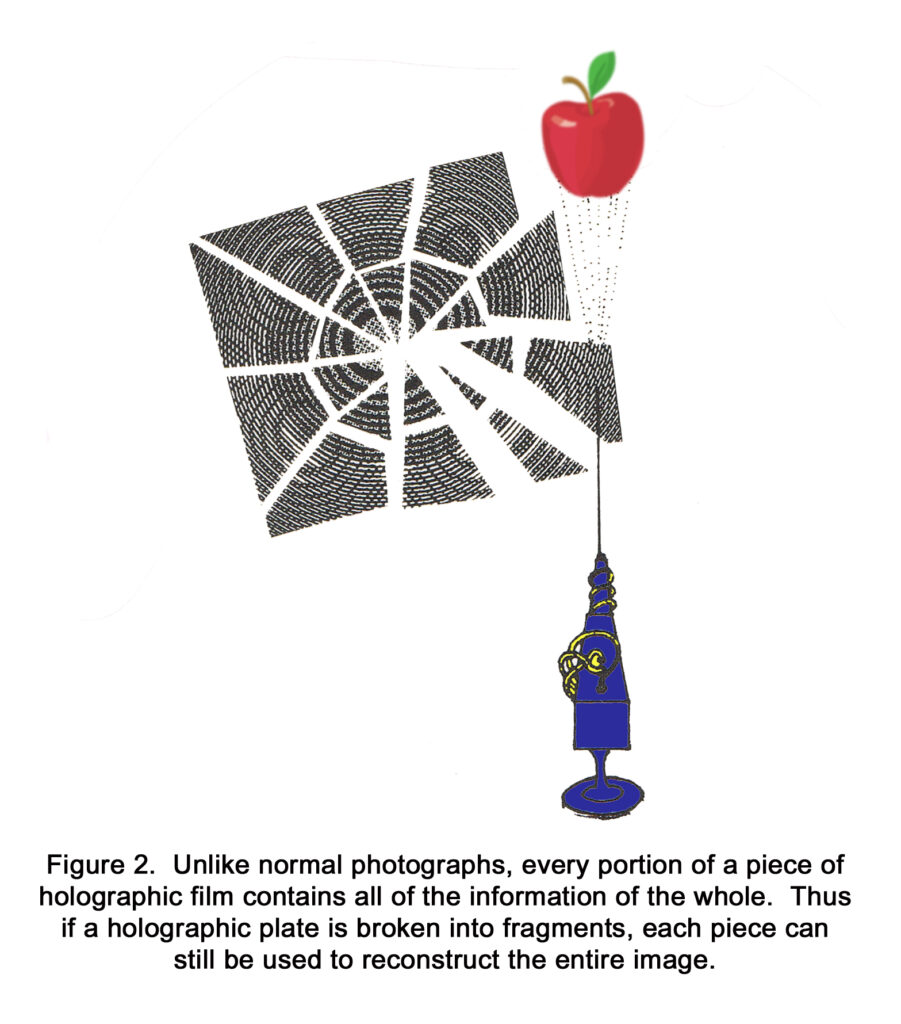

The beam splitter splits the light source into two beams. One is the object beam (here hitting the apple) and the other is the reference beam (bouncing off the mirrors). When the two beams are recombined, they create an interference pattern. The interesting thing is: every part of the „interference pattern“ contains the whole information of the apple, shown in the next image:

Now let's have a look at pages 22 and 23:

„OUR ABILITY TO RECOGNIZE FAMILIAR THINGS“

At first glance our ability to recognize familiar things may not seem so unusual, but brain researchers have long realized it is quite a complex ability. For example, the absolute certainty we feel when we spot a familiar face in a crowd of several hundred people is not just a subjective emotion, but appears to be caused by an extremely fast and reliable form of information processing in our brain. In a 1970 article in the British science magazine Nature, physicist Pieter van Heerden proposed that a type of holography known as recognition holography offers a way of understanding this ability.* In recognition holography a holographic image of an object is recorded in the usual manner, save that the laser beam is bounced off a special kind of mirror known as a focusing mirror before it is allowed to strike the unexposed film. If a second object, similar but not identical to the first, is bathed in laser light and the light is bounced off the mirror and onto the film after it has been developed, a bright point of light will appear on the film. The brighter and sharper the point of light, the greater the degree of similarity between the first and second objects. If the two objects are completely dissimilar, no point of light will appear. By placing a light-sensitive photocell behind the holographic film, one can actually use the setup as a mechanical recognition system.

A similar technique known as interference holography may also explain how we can recognize both the familiar and unfamiliar features of an image such as the face of someone we have not seen for many years. In this technique an object is viewed through a piece of holographic film containing its image. When this is done, any feature of the object that has changed since its image was originally recorded will reflect light differently. An individual looking through the film is instantly aware of both how the object has changed and how it has remained the same.

Here’s how I understand it: Imagine taking a picture of a crowd dancing at a concert and creating a holographic interference pattern from it. Now, suppose you isolate a single person from that crowd and generate a second interference pattern of just that person. When you overlay the two patterns, the holographic system would highlight the selected individual within the crowd. The degree of brightness or clarity in the highlight indicates how closely the two patterns match.

This process exemplifies pattern matching. The hologram acts as a comparison tool, analyzing the interference patterns to identify similarities.
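To make the crowd example a bit more concrete, here is a minimal numerical sketch. It is not an optical simulation. It assumes that the matching step can be approximated by a cross-correlation computed with Fourier transforms, and the function name `cross_correlate` as well as the random „crowd“ image are made up for illustration. The bright peak in the result plays the role of the bright point of light on the film.

```python
import numpy as np

def cross_correlate(scene: np.ndarray, template: np.ndarray) -> np.ndarray:
    """Circular cross-correlation of a scene with a smaller template via the FFT."""
    # Remove the mean so that uniformly bright areas do not dominate the result.
    scene = scene - scene.mean()
    padded = np.zeros_like(scene)
    h, w = template.shape
    padded[:h, :w] = template - template.mean()
    # Correlation theorem: multiply one spectrum by the complex conjugate of the other.
    spectrum = np.fft.fft2(scene) * np.conj(np.fft.fft2(padded))
    return np.real(np.fft.ifft2(spectrum))

rng = np.random.default_rng(0)
scene = rng.random((64, 64))              # stand-in for the "crowd"
template = scene[20:28, 35:43].copy()     # the "single person" cut out of the crowd
unrelated = rng.random((8, 8))            # a pattern that is not in the crowd

corr = cross_correlate(scene, template)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(corr), corr.shape))
print("brightest point at", peak)         # row/column where the template was cut out

# A pattern that does not occur in the scene produces a much weaker maximum.
print(corr.max(), cross_correlate(scene, unrelated).max())
```

The height of the peak acts as a similarity score: the better the template matches a region of the scene, the more the individual products add up instead of cancelling out.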

Quantum Computers

With that in mind, let's look at how quantum computers work (I am in no way an expert).

I understand it the following way:

Take some qubits and entangle them or perform other operations (like flipping their states). These qubits exist in superposition (a wave function) until I measure them. When measured, the wave function collapses, and voilà, I can read out the resulting state of the qubits.

As a side note:

Each qubit is like a tiny Schrödinger’s cat. A lot of those cats are working together to create a quantum computer. 🙂

Let’s look at another explanation from an article on Popular Science:

„The art of doing a quantum algorithm is how you manipulate all of those entangled states and then interfere in a way that the incorrect amplitudes cancel out, and the amplitudes of the correct one come forward, and you get your answer,“ Nazario says. „You have a lot more room to maneuver in a quantum algorithm because of all these entangled states and this interference compared to an algorithm that only allows you to flip between zeroes and ones.“

So, what is happening here?

Initially, a quantum computer functions like an analog computer, creating interference patterns or wave functions using qubits. Constructive interference (when waves are in phase) results in higher amplitudes, while destructive interference (when waves are out of phase) leads to lower amplitudes. The amplitudes themselves cannot be read out directly: a measurement returns one outcome, and the probability of each outcome is the squared magnitude of its amplitude.

Here is my perspective:

Quantum computers leverage the superposition of states and interference to highlight the most probable outcomes. The probability distribution of a quantum state emerges from the constructive and destructive interference of its wave function, amplifying correct solutions while canceling out incorrect ones. Similarly, interference patterns encode and distribute information holistically, allowing the retrieval of specific data by amplifying the desired patterns. This connection suggests that the underlying principle of quantum computation—the manipulation of probabilities through interference—may find parallels in other systems, such as neural networks or even advanced optical devices.
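Since Grover's algorithm was my starting point, here is a small sketch of how the amplification works. It is a classical simulation of the amplitudes with NumPy, not code for a real quantum computer, and the function name `grover_search` and the chosen numbers (4 qubits, marked state 11) are just assumptions for the example. Each round flips the sign of the marked amplitude and then mirrors all amplitudes around their mean, which is where the constructive and destructive interference happens.

```python
import numpy as np

def grover_search(n_qubits: int, marked: int) -> np.ndarray:
    """Simulate Grover's algorithm classically and return the outcome probabilities."""
    n_states = 2 ** n_qubits
    # Start in a uniform superposition: every state has the same amplitude.
    amps = np.full(n_states, 1 / np.sqrt(n_states))
    # About (pi / 4) * sqrt(N) rounds bring the marked state close to probability 1.
    rounds = int(np.floor(np.pi / 4 * np.sqrt(n_states)))
    for _ in range(rounds):
        amps[marked] *= -1               # oracle: flip the phase of the marked state
        amps = 2 * amps.mean() - amps    # diffusion: reflect every amplitude about the mean
    return amps ** 2                     # measurement probabilities

probs = grover_search(n_qubits=4, marked=11)
print(int(np.argmax(probs)), round(float(probs[11]), 3))  # marked state, probability about 0.96
```

With 16 possible states, three rounds are enough to push the marked state from a probability of 1/16 to roughly 96 percent, while the 15 wrong answers share the rest.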

Neural networks

Neural networks are often considered black boxes. A typical neural network consists of an input layer, an output layer, and one or more hidden layers in between. Each layer is made up of neurons, which use specific activation functions (such as ReLU). These neurons are connected to one another through weights or parameters. During the learning phase, these weights are adjusted step by step: backpropagation computes how much each weight contributed to the error, and an optimizer (usually a variant of gradient descent) changes the weights accordingly. The goal is to minimize the error between the network’s output and the expected output.
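The training loop described above can be sketched in a few lines of NumPy. This is only a toy under my own assumptions: one hidden layer with 8 ReLU neurons, a made-up task (learning the absolute value of a number), a mean squared error, and a hand-written backward pass instead of a real framework.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(256, 1))   # inputs
y = np.abs(X)                           # expected outputs (toy task: learn |x|)

# Weights and biases of a network with one hidden layer of 8 neurons.
W1 = rng.normal(scale=0.5, size=(1, 8)); b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1)); b2 = np.zeros(1)
lr = 0.1                                # learning rate
losses = []

for step in range(500):
    # Forward pass: input -> hidden layer (ReLU) -> output.
    z = X @ W1 + b1
    h = np.maximum(z, 0)
    pred = h @ W2 + b2
    err = pred - y
    losses.append(float(np.mean(err ** 2)))

    # Backward pass (backpropagation): apply the chain rule layer by layer.
    grad_pred = 2 * err / len(X)
    grad_W2 = h.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_z = (grad_pred @ W2.T) * (z > 0)   # ReLU passes gradients only where it was active
    grad_W1 = X.T @ grad_z
    grad_b1 = grad_z.sum(axis=0)

    # Gradient descent: move every parameter a small step against its gradient.
    W1 -= lr * grad_W1; b1 -= lr * grad_b1
    W2 -= lr * grad_W2; b2 -= lr * grad_b2

print(losses[0], losses[-1])  # the error at the start and after training
```

Note that no single weight „knows“ the answer. The result only emerges from all weights acting together, which is the property I come back to below.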

Researchers are striving to uncover the internal workings of neural networks, a crucial step toward enhancing AI transparency, ensuring safety, and achieving alignment with human values.

CNN – Convolutional neural network

CNNs are widely used for computer vision and image classification, among other applications. A convolution is a mathematical operation, similar to addition or multiplication. 3Blue1Brown has an excellent video that explains this operator in detail.
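To show what the operation does, here is a minimal sketch of a 2D convolution written out with plain loops. The function name `conv2d`, the tiny 6x6 test image and the choice of a Sobel kernel are my own assumptions for illustration. One detail worth knowing: a mathematical convolution flips the kernel first, while most deep learning libraries skip the flip (which is strictly speaking a cross-correlation).

```python
import numpy as np

def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D convolution: slide the flipped kernel over the image."""
    kernel = np.flip(kernel)                 # flip both axes (true convolution)
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the window element-wise with the kernel and sum up.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 6x6 image: left half dark (0), right half bright (1).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Sobel kernel that responds to horizontal changes in brightness.
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

edges = conv2d(image, sobel_x)
print(edges)  # non-zero only in the columns where dark meets bright
```

In a CNN the kernel values are not fixed by hand like this. They are weights that the network learns during training.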

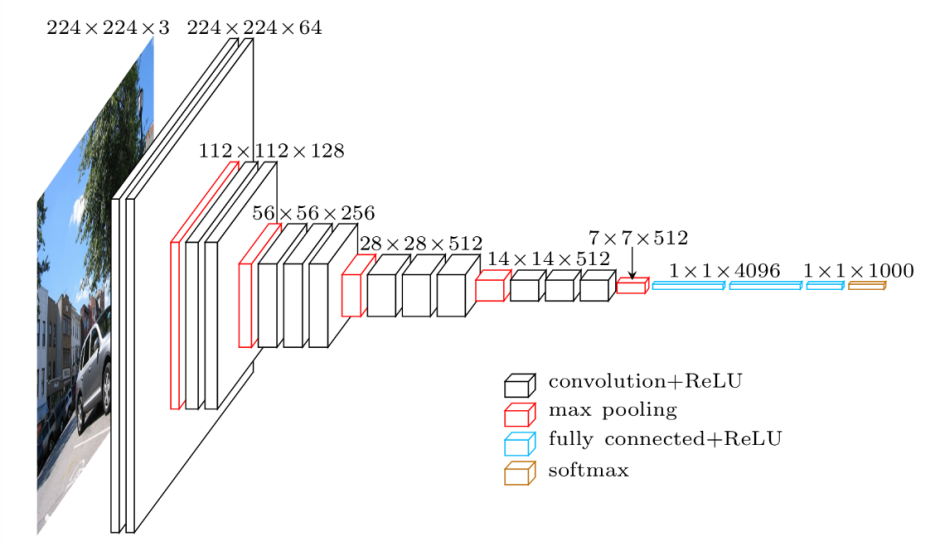

Here is an image of the VGG-16 architecture from 2014:

Also have a look at the video „CNN: Convolutional Neural Networks Explained – Computerphile“ by Computerphile.

I made a screenshot of the video at minute 9:46:

The image shows how a feature is spread over an entire range of layers.

Okay, if you’ve followed me this far, you might be wondering: CNNs are great, but what’s your point? I asked ChatGPT to help me with an answer:

All three systems (neural networks, quantum computers and holography) leverage principles of distributed information processing, where the whole system contributes to solving complex problems. They encode, transform, and amplify key patterns to highlight desired outcomes, whether it’s reconstructing a holographic image, finding a quantum state, or classifying an object in a neural network.

My perspective is as follows:

A neural network’s parameters and activation functions can be seen as forming a complex interference pattern.

Highlighting the Core Idea

1. In the 2015 phys.org article „Researchers demonstrate pattern recognition using magnonic holographic memory device“, the researchers report that they:

„(…) have successfully demonstrated pattern recognition using a magnonic holographic memory device, a development that could greatly improve speech and image recognition hardware.“

„The most appealing property of this approach is that all of the input ports operate in parallel. It takes the same amount of time to recognize patterns (numbers) from 0 to 999, and from 0 to 10,000,000. Potentially, magnonic holographic devices can be fundamentally more efficient than conventional digital circuits.“

„We were excited by that recognition, but the latest research takes this to a new level,“ said Alex Khitun, a research professor at UC Riverside, who is the lead researcher on the project. „Now, the device works not only as a memory but also a logic element.“

2. A 2003 article in „Nature“ called „The light fantastic“ describes how holography can be used for high-capacity data storage. Here is a quote:

„Van Heerden’s technique is known as holographic data storage, as the method for storing the pages is similar to that used to create holograms — the different pages of data are analogous to the different views of an object that are stored in a hologram. A whole page of data is read or written at once — conventional memories read data bit by bit — so retrieval speeds are higher. And the angular tuning required to retrieve different data pages can be controlled by mirrors that can be deformed by electric signals, eliminating the need for mechanical parts — another benefit when speed is of the essence.“

Interference patterns can store large amounts of data!

3. In his 2022 article „Deep holography“, Guohai Situ states: „On the one hand, DNN has been demonstrated to be in particular proficient for holographic reconstruction and computer-generated holography almost in every aspect.“

So deep neural networks (DNNs) are used to reconstruct and generate holograms, that is, interference patterns, and they are very good at the task.

In his „Conclusion and perspective remarks“ he writes:

„The capability of light-speed processing in parallel of holographic neural networks indeed guarantees tremendous inference power, even outperforming Nvidia’s top of the line Tesla V100 tensor core GPU in certain task“

4. The most important articles are from Anthropic. One is called „Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet“. The other is „Toy Models of Superposition“.

Superposition hypothesis

„We believe that many features in large models are in “cross-layer superposition”. That is, gradient descent often doesn’t really care exactly which layer a feature is implemented in or even if it is isolated to a specific layer, allowing for features to be “smeared” across layers.“

„“Superposition,” in our context, refers to the concept that a neural network layer of dimension N may linearly represent many more than N features. The basic idea of superposition has deep connections to a number of classic ideas in other fields. It’s deeply connected to compressed sensing and frames in mathematics – in fact, it’s arguably just taking these ideas seriously in the context of neural representations. It’s also deeply connected to the idea of distributed representations in neuroscience and machine learning, with superposition being a subtype of distributed representation.“

Maybe an explanation could be:

The concept of superposition in neural networks mirrors the principle of interference patterns. Just as interference patterns store overlapping waves to encode complex information holistically, superposition allows neural networks to distribute features across multiple layers and neurons.
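The definition quoted above (a layer of dimension N representing many more than N features) can be tried out numerically. The following is only a toy sketch under my own assumptions and not Anthropic's setup: each feature gets a random direction in a 256-dimensional space, there are 1024 such features, and only three of them are active at once. The numbers and variable names are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_dims, n_features = 256, 1024        # four times more features than dimensions

# Give every feature a random unit-length direction. In high dimensions random
# directions are almost (but not exactly) orthogonal to each other.
directions = rng.normal(size=(n_features, n_dims))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)

# Sparse input: only a handful of features are switched on at the same time.
active = [3, 100, 200]
activation = directions[active].sum(axis=0)   # their directions are simply added up

# Read out every feature by projecting the activation onto its direction.
readout = directions @ activation
top = sorted(int(i) for i in np.argsort(readout)[-3:])
print(top)  # the three strongest readouts are the active features

# The inactive features do not read exactly zero: that residue is the interference.
inactive = np.delete(readout, active)
print(float(np.abs(inactive).max()))
```

The active features stand out clearly, while all the others show a small non-zero readout caused by the overlap of the directions. As long as only a few features are active at once, this interference stays small enough to be ignored, which is why sparsity matters so much for superposition.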

Conclusion

I came across this concept just three days ago, and I’m still processing its implications. I’ve noticed many interdisciplinary parallels among these ideas, and perhaps you might see them too. While I’m not an expert, I hope that individuals far smarter than me can connect these dots even more effectively.

The underlying principles might be holographic, which could deepen our understanding of how our brains function and drive significant advancements in AI development.